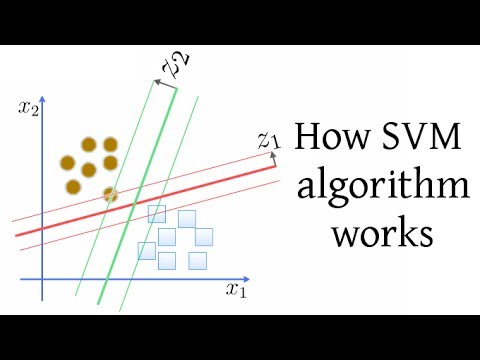

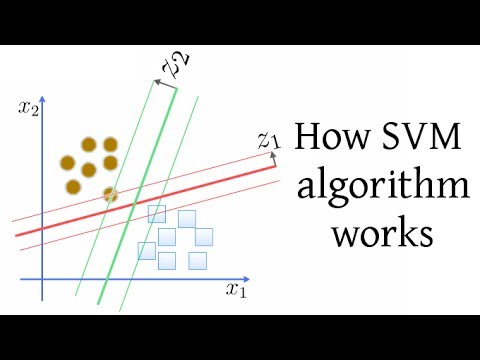

How the SVM (Support Vector Machine) Algorithm Works

Posted by dœm on January 14, 2021
