Subtitles and Vocabulary

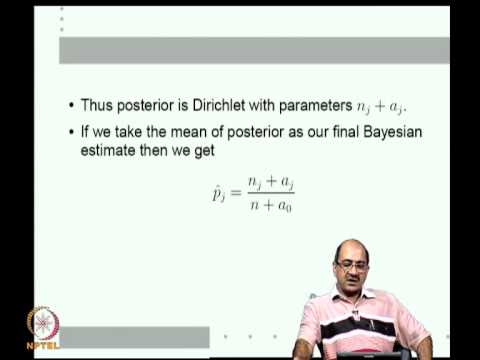

Mod-03 Lec-08 Bayesian Estimation examples; the exponential family of densities and ML estimates

Posted by aga on January 14, 2021

Words in the video

square

US /skwɛr/ · UK /skweə(r)/

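- n. (c./u.) a square (shape); a quadrilateral; a number multiplied by itself (as in length × width); a public square, plaza

- adj. honest; square (of area units, e.g. square meters); squared

- adv. honestly

- v.t. to tie (a score); to settle (an account); to square (a number); to form a square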

A2 Beginner (TOEIC)
