Subtitles and Vocabulary

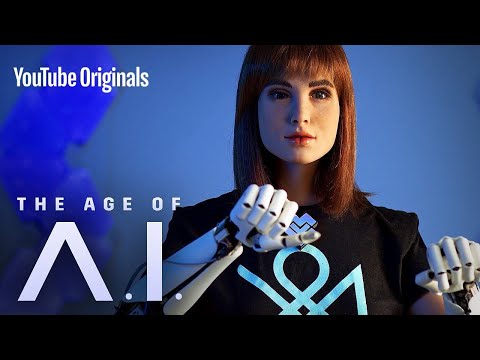

How A.I. is searching for Aliens | The Age of A.I.


Posted by Takaaki Inoue on January 14, 2021

Words in the video

recognize

US /ˈrek.əɡ.naɪz/ · UK /ˈrek.əɡ.naɪz/

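- v.t. to acknowledge (something) as true; to accept; to acknowledge (the importance of something); to respect (a legal authority); to publicly praise (someone's contribution); to recognize, to perceive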

A2 (Elementary), TOEIC
