Subtitles and Vocabulary

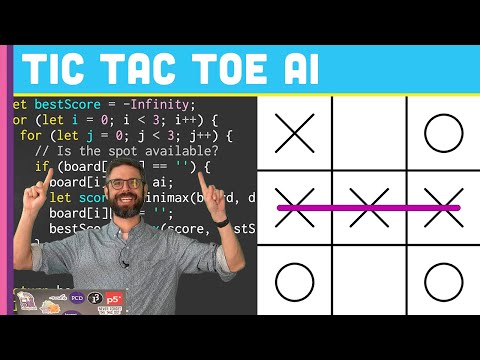

Coding Challenge 154: Tic Tac Toe AI with Minimax Algorithm


Posted by 林宜悉 on January 14, 2021

Vocabulary from the video

negative

US /ˈnɛɡətɪv/ · UK /ˈneɡətɪv/

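- n. negative electrode; negative statement; a "no" answer; photographic or film negative

- adj. unpleasant; (of a number) less than zero; pessimistic; expressing denial or refusal; (of a test result) negative; minus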

A2 (Elementary)
