Subtitles and Vocabulary

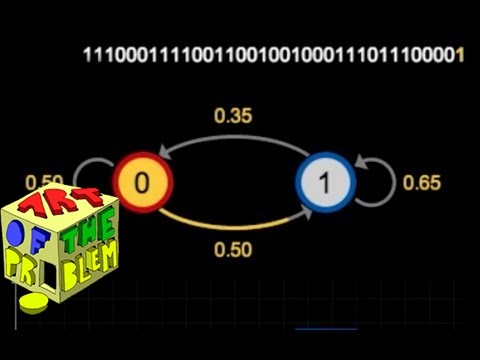

Markov chains (Language of Coins: 11/16)


Posted by Lypan on January 14, 2021

Vocabulary in the video

light

US /laɪt/ · UK /laɪt/

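- v.t. to set fire to; to switch on (a light)

- adj. bright; pale (of a color); light (in weight)

- n. (c./u.) light; clarification (shedding light on something); lighting; signal; facial expression

- adv. with little luggage (when traveling)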

A1 Beginner
