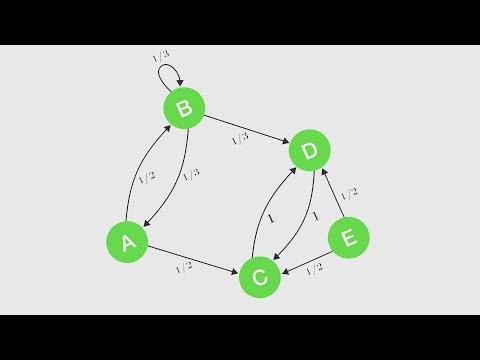

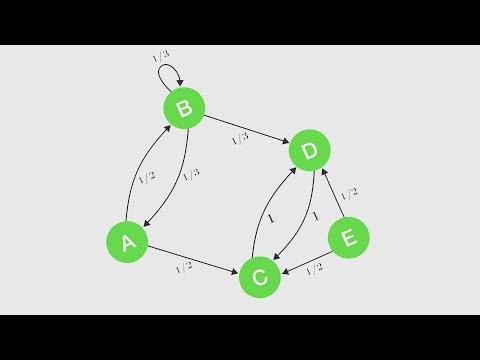

Markov Chains

Posted by 林宜悉 on January 14, 2021
